What is Big Data Science?

So What is Big Data?

In this post we will answer the question “What is Big Data Science?” If you are a student of Computer Science, or you are interested in the impact that Big Data is having on information technology, then this article should help.

Big Data is the term used to describe enormous quantities of data that are generated and captured at very high speed and are often unstructured. As an example, any popular social media platform like Facebook or Instagram deals with hundreds (even thousands or millions) of pieces of content, including photos, messages, posts, and video streams, that its users produce every minute. This means that massive amounts of data are generated, stored and processed at very high speed. The data is also diverse (text, images, and video) and often unstructured, which makes processing it quite a challenge for traditional methods.

Therefore, when we say big, we mean multiples of zettabytes!

As you can see from the slide, 1 zettabyte = 1 trillion gigabytes.

A zettabyte is a digital unit of measurement. One zettabyte is equal to one sextillion bytes or 10^21 (1,000,000,000,000,000,000,000) bytes, or, put another way, one zettabyte is equal to a trillion gigabytes.[4][2] To put this into perspective, consider that “if each terabyte in a zettabyte were a kilometre, it would be equivalent to 1,300 round trips to the moon and back (768,800 kilometers)”.[4] Or, as former Google CEO Eric Schmidt puts it, from the very beginning of humanity to the year 2003, an estimated 5 exabytes of information was created,[9] which corresponds to 0.5% of a zettabyte. In 2013, that amount of information (5 exabytes) took only two days to create, and that pace is continuously growing.[9]
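The arithmetic behind these figures can be checked with a few lines of Python. This is only a sketch: the constant names are made up for illustration, and it assumes decimal (SI) units, where each step up is a factor of 1,000.

```python
# Decimal (SI) storage units expressed in bytes.
# The constant names are illustrative, not taken from any library.
GIGABYTE = 10**9
TERABYTE = 10**12
EXABYTE = 10**18
ZETTABYTE = 10**21

# One zettabyte expressed in gigabytes: 10^21 / 10^9 = 10^12 (one trillion).
gigabytes_per_zettabyte = ZETTABYTE // GIGABYTE

# Five exabytes as a percentage of one zettabyte.
five_exabytes_percent = 5 * EXABYTE * 100 / ZETTABYTE

# One kilometre per terabyte, divided by the quoted round-trip distance.
ROUND_TRIP_KM = 768_800
round_trips = (ZETTABYTE // TERABYTE) / ROUND_TRIP_KM

print(f"{gigabytes_per_zettabyte:,} gigabytes in a zettabyte")
print(f"5 exabytes is {five_exabytes_percent}% of a zettabyte")
print(f"about {round_trips:,.0f} round trips to the moon")
```

The results agree with the figures quoted above: a trillion gigabytes, 0.5%, and roughly 1,300 round trips.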

Above we mentioned two social media platforms, and the data that is generated by their users. In the slide below we see further examples of platforms and the data they generate:

With Amazon sales of $250,000 per minute, 3.5 million Google searches per minute, and 4 million videos watched on YouTube every minute, you can imagine the amount of data being generated by just those three companies, and these figures were taken in 2013! Wow!

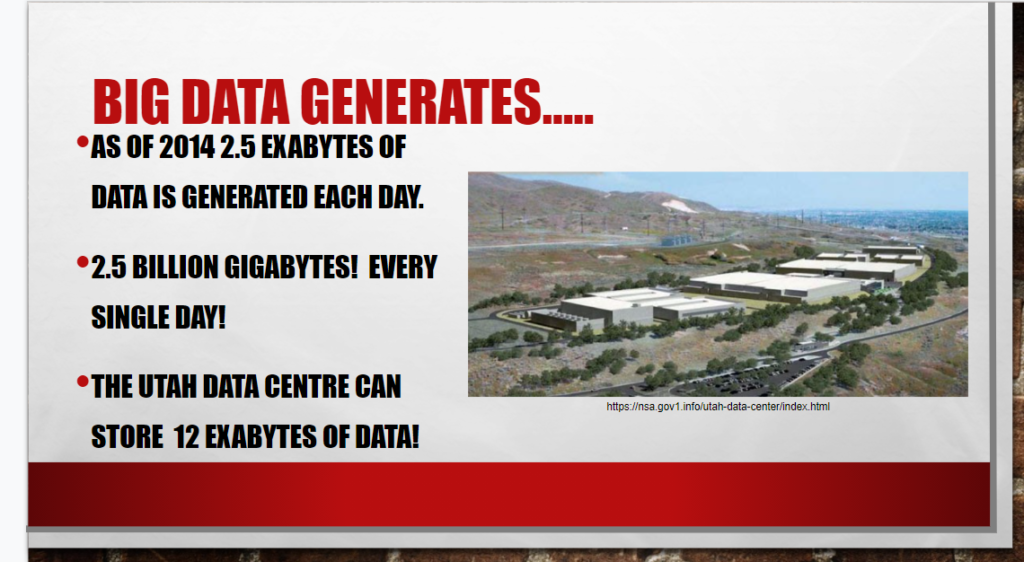

Looking at the slide below, we see that this massive Utah-based data centre generates 2.5 exabytes of data every day! That is 2.5 billion gigabytes every day! These data centres are massive places because of the data load, and the computers that store the data have to be cooled, serviced and maintained, so there is a big job to be done to support the massive amount of data being generated worldwide.

What are the Big Data Applications?

The applications of Big Data are many and varied. With Big Data we can now collect the data necessary to answer some of the important questions that concern mankind.

For example, we will now be able to collect the data needed to determine whether the radiation from cell phones causes cancer, and if it does, under what circumstances. Information like this will help cell phone users to find ways to use their devices safely, and may even help the development of new devices that contribute to user safety.

Another application is the collection of data from users of an online course. This data is valuable for analysing the course and deciding how to improve it. This is a great way to help increase the number of students taking the course, and even finishing it! Many times a student will buy a course online and never complete it, thereby not getting the benefit of the study. Improving the course in the identified areas of concern will help to engage students for longer.

Another interesting example of the use of big data is in the healthcare industry. For example, President Obama came up with a program called the Cancer Moonshot Program, aimed at accomplishing 10 years of progress in the search for a cure in half the time.

Medical researchers can use large amounts of data on treatment plans and recovery rates of cancer patients in order to find trends and treatments that have the highest rates of success in the real world. For example, researchers can examine tumor samples in biobanks that are linked up with patient treatment records. Using this data, researchers can see things like how certain mutations and cancer proteins interact with different treatments and find trends that will lead to better patient outcomes.

https://www.datapine.com/blog/big-data-examples-in-healthcare

So Big Data is...

Big Data is data that cannot be processed or analysed by traditional means. For example:

- the volume of the data means that it cannot fit onto a traditional computer server.

- the make-up and nature of the data is very diverse, meaning it consists of text, video and images.

- the data is produced at very high speed, for example as streaming data, particularly video.

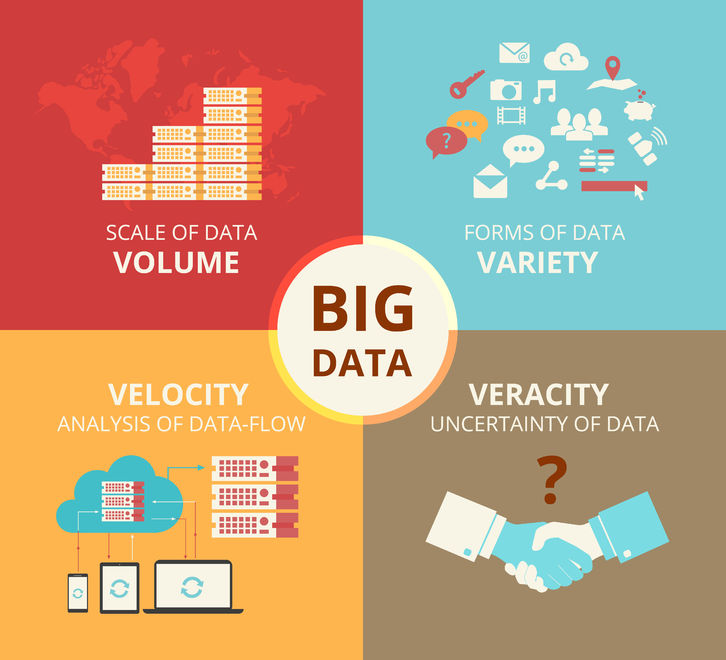

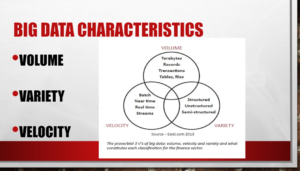

Therefore, the 3 defining factors of big data are:

- Volume – This is the measure of the size of the records, transactions, tables and files.

- Variety – This refers to the make-up of the data, whether it is structured, unstructured, or semi-structured.

- Velocity – This refers to the speed of processing the data, using batch processing, near-time or real-time processing, or streaming data.

What Is Big Data Volume?

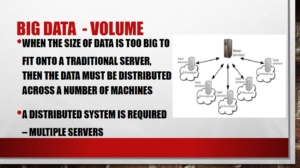

Because of the volume of the data, non-traditional means must be employed to save and store the data. Because big data cannot use traditional servers alone, it must use a distributed system. This is a system of multiple interlinked servers in different locations, that have the capability of storing the data. The data will be distributed across these servers, rather than attempting to sit on one designated server.

You will see from the diagram above that the data is distributed across a number of super processors, each having a number of processors, all of which are connected to a master server for control.

For example, a normal relational database does not have the capability of handling such a large dataset. The dataset must be broken down and the data spread across a network of computers in a distributed database system. The data can then be processed to produce information.
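To make the idea of spreading a dataset across several servers concrete, here is a minimal sketch of one common technique, hash partitioning. It is an illustration only: the function names, the record keys and the choice of four nodes are assumptions, and each "node" is just a dictionary in memory rather than a real server.

```python
import hashlib


def node_for_key(key: str, node_count: int) -> int:
    """Map a record key to a node index using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % node_count


def distribute(records: dict[str, str], node_count: int) -> list[dict[str, str]]:
    """Split one large dataset into per-node pieces."""
    nodes: list[dict[str, str]] = [{} for _ in range(node_count)]
    for key, value in records.items():
        nodes[node_for_key(key, node_count)][key] = value
    return nodes


# Hypothetical dataset: 1,000 user records spread over 4 nodes.
records = {f"user-{i}": f"profile data {i}" for i in range(1000)}
nodes = distribute(records, node_count=4)

for index, node in enumerate(nodes):
    print(f"node {index} holds {len(node)} records")
```

Because the hash of a key never changes, any machine can work out which node holds a given record without asking a central index. Real distributed databases add replication and rebalancing on top of this basic idea.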

Data manipulation is significantly more difficult when dealing with data that does not follow a specific format. To overcome this, machine learning techniques are used to identify patterns in the gathered data and generate models that, when applied to incoming, streaming data, can extract valuable information and make data-driven predictions. For example, machine learning algorithms can be used to analyse customer online habits in order to make ‘intelligent’ product recommendations or identify fraudulent activity.
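As a rough illustration of "build a model from gathered data, then apply it to incoming data", the sketch below flags unusual transaction amounts. It is deliberately simplified: instead of a real machine learning algorithm it uses a basic statistical rule (distance from the average), and the function names, the sample amounts and the threshold of 3 standard deviations are all assumptions made for the example.

```python
from statistics import mean, stdev


def fit_model(history: list[float]) -> tuple[float, float]:
    """'Learn' what normal looks like: the average and spread of past amounts."""
    return mean(history), stdev(history)


def is_suspicious(amount: float, model: tuple[float, float], threshold: float = 3.0) -> bool:
    """Flag an amount that lies far from the learned average."""
    centre, spread = model
    return abs(amount - centre) > threshold * spread


# Hypothetical past purchases by one customer.
history = [20.0, 25.0, 22.0, 30.0, 27.0, 24.0, 26.0, 23.0]
model = fit_model(history)

# Hypothetical incoming transactions, checked one at a time as they arrive.
incoming = [25.0, 28.0, 950.0, 21.0]
flagged = [amount for amount in incoming if is_suspicious(amount, model)]

print(flagged)
```

Only the 950.0 transaction is flagged, because it is far outside the customer's usual range. A production fraud system would use many more signals and a trained model, but the fit-then-apply pattern is the same.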

What is Big Data Variety?

With data variety, the lack of structure in big data means it is difficult to store, process and analyse the data. Traditionally, data is organised in relational databases that accept data based on a predetermined model with tables, fields, and relations. This type of data is considered structured as it follows a strict, predefined format.

With Big data you cannot use the traditional rows and columns approach of a relational database because the data is so varied, and cannot fit into a traditional structure.
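The difference can be seen in a small sketch. The table columns, the sample rows and the three JSON records below are invented for illustration; the point is only that every structured row has the same fields, while each varied record brings its own.

```python
import json

# Structured data: every row follows the same predefined columns.
COLUMNS = ("customer_id", "name", "country")
rows = [
    (1, "Ada", "UK"),
    (2, "Linus", "FI"),
]

# Varied data: each record carries whatever fields suit its content.
documents = [
    '{"type": "post", "text": "Hello", "tags": ["intro"]}',
    '{"type": "photo", "url": "photo-1.jpg", "width": 1024, "height": 768}',
    '{"type": "video", "url": "clip-1.mp4", "duration_seconds": 42}',
]
parsed = [json.loads(document) for document in documents]

# Collect every field that appears in at least one record.
all_fields = sorted(set().union(*(record.keys() for record in parsed)))

print("table columns:", COLUMNS)
print("fields seen across records:", all_fields)
for record in parsed:
    missing = [field for field in all_fields if field not in record]
    print(record["type"], "has no value for", missing)
```

Forcing these three records into a single table would need a column for every field, most of them empty in any given row, and the table would have to change whenever a new kind of content appeared.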

For example, data can be collected from sources such as:

- Networked sensors – particularly useful in securing a property, or ensuring the temperature does not rise above a certain threshold.

- Video surveillance – In some cases, video can be submitted as evidence in a crime, so this type of data may need to be kept for a number of years. For example, a modern home security camera constantly processes data to send notifications to connected smartphones when motion is detected near the house.

- Smartphones – You are probably a smartphone user yourself, so you will be aware of the many different apps you use, and the data that each app generates. With an app like WhatsApp, if you don’t have the settings right, you can find your phone clogged with unwanted videos from all over the world, and your phone can soon grind to a halt!

- Mouse clicks – These form part of the data captured when you monitor a network. The data generated can be stored for forensic analysis if a cyber security breach has occurred.

What is Big Data Velocity?

In Big Data, velocity refers to whether data is in motion or at rest. If data is stored, then it is at rest. If the data is being streamed, then it has motion, and it will have a velocity.
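The contrast between data at rest and data in motion can be sketched as follows. The function names and the temperature readings are assumptions for the example: the batch function needs the whole stored dataset before it can answer, while the streaming function updates its answer as each new reading arrives.

```python
from typing import Iterable, Iterator


def batch_average(stored: list[float]) -> float:
    """Data at rest: the full dataset is available before processing starts."""
    return sum(stored) / len(stored)


def streaming_average(stream: Iterable[float]) -> Iterator[float]:
    """Data in motion: yield an updated average after every new reading."""
    count = 0
    total = 0.0
    for reading in stream:
        count += 1
        total += reading
        yield total / count


# Hypothetical temperature readings from a sensor.
readings = [21.0, 22.0, 20.0, 23.0]

print("batch:", batch_average(readings))
for running in streaming_average(iter(readings)):
    print("running:", running)
```

The streaming version never holds more than the running count and total, which is what makes it suitable for data that arrives continuously and may never stop.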

Big data requires a new breed of networking technology to handle changes in data, and then to transmit them accordingly.

To support efficient networking for big data, 5G wireless networks should accommodate big data’s features such as volume, velocity, and variety. Big data volume requires great network capacity, and that capacity can be made available by the use and re-use of spectrum. Adding more spectrum boosts network capacity, which enables 5G to exchange huge amounts of data.

The following article explains the use of Big Data and its requirement for appropriate networking technologies:

https://medium.com/the-research-nest/big-data-driven-networking-explained-1a7797c9ba56

In Conclusion

To recap, the 3 defining factors of big data are:

- Volume – This is the measure of the size of the records, transactions, tables and files.

- Variety – This refers to the make-up of the data, whether it is structured, unstructured, or semi-structured.

- Velocity – This refers to the speed of processing the data, using batch processing, near-time or real-time processing, or streaming data.

In this post on Big Data, we have seen how Big Data can make use of 5G networks and the basic factors that enable this interaction. With Big Data and networking combined, the world of information exchange is changing, and we are developing an efficient way of dealing with the vast amounts of data being generated.